Our organization's business has grown year over year, and we found ourselves hosting AWS workloads in many peered VPCs spread across several accounts. Managing routing and overall network connectivity for these workloads had become a nightmare for everyone involved. If you are a technology leader looking to expand your cloud adoption, you will definitely want to read on!

In this blog post, we’ll show you how we used AWS Cloud WAN to build a Global Network architecture that interconnects our workloads, regardless of where they are hosted.

Addressing pain points

We started by designing a new network architecture, with the help of our AWS partners, meant to address the following pain points we were experiencing:

- Private IP address management using an on-premises IPAM solution is tedious and error-prone.

- Managing cross-VPC connectivity using VPC peering does not scale well.

- Performing network segmentation using VPC resources is convoluted.

- Opportunities to optimize data transfer costs are limited.

IPAM pools

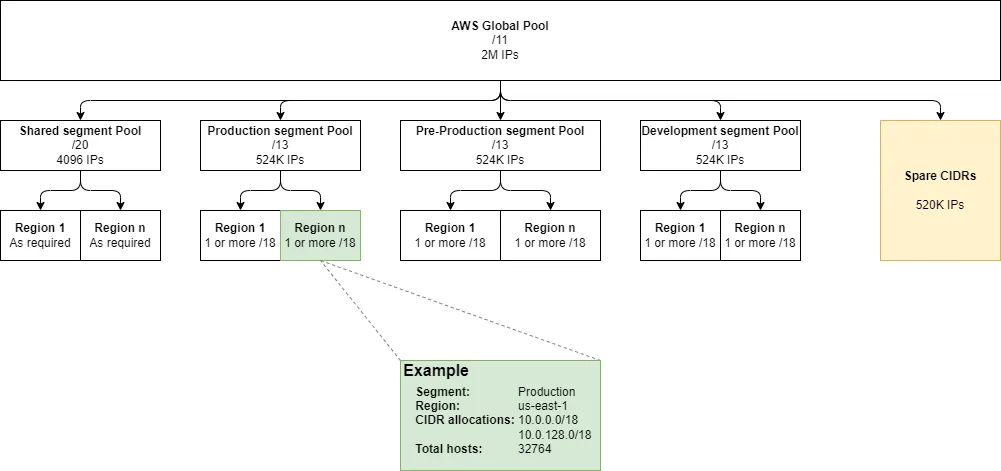

We addressed the first pain point rather easily by building IPAM pools, using AWS IP Address Manager. Here is a high-level diagram showing the breakdown of the IP address space into IPAM pools:

AWS Global pool

The top-level pool represents the CIDR block(s) associated with the Global Network, that is, all of our AWS private IP space. We started by allocating a /11 CIDR, which provides a pool of over 2 million IPs. This is three orders of magnitude larger than the current private IP address usage in our AWS Organization, so there is plenty of room for growth. At the time of writing, if all 2 million IPs were actively used, we would pay a little over $400,000 per month for IPAM to manage them. However, we are confident that should this situation occur, revenues from the new workloads would compensate for this expense.
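The sizing and cost figures above can be checked with a few lines of arithmetic. The sketch below is illustrative only: `10.0.0.0/11` is a hypothetical stand-in for our actual CIDR, and the rate of $0.00027 per active IP per hour and the 730-hour month are assumptions, so verify them against the current IPAM pricing page before relying on the result.

```python
import ipaddress

# Hypothetical /11 block standing in for the Global pool's CIDR.
global_pool = ipaddress.ip_network("10.0.0.0/11")

# Assumed pricing inputs; check the current IPAM pricing page.
RATE_PER_ACTIVE_IP_HOUR = 0.00027  # USD, assumption
HOURS_PER_MONTH = 730              # average month, assumption


def monthly_ipam_cost(active_ips: int) -> float:
    """Estimated monthly charge for a number of actively used IPs."""
    return active_ips * RATE_PER_ACTIVE_IP_HOUR * HOURS_PER_MONTH


total_ips = global_pool.num_addresses  # 2 ** (32 - 11)
worst_case = monthly_ipam_cost(total_ips)

print(f"{total_ips:,} addresses in {global_pool}")
print(f"~${worst_case:,.0f} per month if every address were active")
```

Under these assumptions, the worst case lands a little above $400,000 per month, which is consistent with the estimate above.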

Segment pools and regional pools

This top-level pool then feeds into four distinct network segment pools, one per dedicated routing domain. Having per-segment CIDR blocks enables tenants to reference them in their network security rules, regardless of the region they are hosted in. Finally, the segment pools feed into regional pools, each having one or more /18 CIDR blocks.

With IPAM in place, we proceeded to use Resource Access Manager (RAM) to share the pools with other principals within our AWS Organization. For example, we shared the Production segment pool with the Production organizational unit (OU). This way, all accounts under this OU can create VPCs using this shared IPAM pool.
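To illustrate what it means for accounts to carve VPC CIDRs out of a shared pool without overlaps, here is a minimal sketch of the idea. It is not how IPAM is implemented; IPAM does this for us. The function name `next_free_cidr` and all CIDRs are hypothetical, chosen only for the example.

```python
import ipaddress
from typing import Iterable, List, Optional

Network = ipaddress.IPv4Network


def next_free_cidr(
    pool_cidrs: Iterable[str],
    allocated: Iterable[str],
    prefix_len: int,
) -> Optional[Network]:
    """Return the first /prefix_len block in the pool that does not
    overlap any existing allocation, or None if the pool is exhausted."""
    taken: List[Network] = [ipaddress.ip_network(c) for c in allocated]
    for cidr in pool_cidrs:
        pool = ipaddress.ip_network(cidr)
        if prefix_len < pool.prefixlen:
            continue  # requested block is larger than this pool CIDR
        for candidate in pool.subnets(new_prefix=prefix_len):
            if not any(candidate.overlaps(t) for t in taken):
                return candidate
    return None


# Hypothetical regional pool holding two non-contiguous /18 blocks.
regional_pool = ["10.0.0.0/18", "10.0.192.0/18"]
existing_vpcs = ["10.0.0.0/20", "10.0.16.0/20"]

new_vpc = next_free_cidr(regional_pool, existing_vpcs, prefix_len=20)
print(new_vpc)  # 10.0.32.0/20
```

Because every account draws from the same pool, the overlap check happens in one place instead of relying on each team to consult a spreadsheet.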

Spares!

Have you noticed the Spare CIDRs in the above diagram? If future growth requires expanding the IP space of any of these pools, we can allocate any of these various-sized CIDRs to any of the IPAM pools. In total, there are ~524K spare IPs we can allocate to accommodate future growth. You can see in the diagram’s example that we allocated two non-contiguous CIDRs to the us-east-1 IPAM pool for the Production segment.

There are significantly fewer IP addresses in the shared segment pools because we want to minimize the number of workloads having cross-segment connectivity. More on that later.

Global Network

We addressed the cross-VPC connectivity pain point by leveraging the AWS Cloud WAN offering. The Global Network acts as the hub of our network topology, while its Edge Locations and VPCs are its spokes. While there is a premium to pay to delegate WAN management to AWS, as we’ll cover later, its ease of use is well worth it. Tenants only need to create a Core Network Attachment request for their VPC and tag the request with the proper NetworkSegment tag value. We use this tag’s value to designate which network segment the VPC will be connected to. Then, all that’s left is for us to approve the attachment request and for the tenant to add routes to the Core Network.

The best part is that all these changes can be implemented as code, using Terraform. Even the Core Network attachment request’s approval! This enables a rigorous audit trail for any changes occurring with the WAN’s network topology. These trails show up in both AWS and in our source control commits. Furthermore, this opens up the possibility to test potentially impactful changes during CI, before applying them.

Network segmentation

Next, we wanted this architecture to facilitate network segmentation between production and non-production workloads. We created distinct segments for production workloads and the various non-production environments by using Cloud WAN’s Network Policies. This way, each segment is its own routing domain, and we require each attachment request to be accepted from within the Networking account. That being said, it is possible to perform segment sharing, which allows you to route traffic across segments. We enabled segment sharing only for the Shared segment, where we only host workloads requiring connectivity to all of our VPC-enabled AWS infrastructure. Security Information and Event Management (SIEM) solutions are a prime example of such a shared workload.
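To make the segmentation setup more concrete, here is a rough sketch of what such a policy document could look like, built as a Python dictionary and printed as JSON. Treat it as an approximation: the field names follow the Cloud WAN policy document format as we understand it and should be validated against the current AWS reference, while the segment names other than Production and Shared, the ASN range, the rule number and the single edge location are hypothetical values picked for the example.

```python
import json

# Hypothetical segment names; only Production and Shared come from this post.
SEGMENTS = ["production", "staging", "development", "shared"]

policy = {
    "version": "2021.12",
    "core-network-configuration": {
        "asn-ranges": ["64512-65534"],  # assumed private ASN range
        "edge-locations": [{"location": "us-east-1"}],
    },
    # One routing domain per segment; every attachment needs approval.
    "segments": [
        {"name": name, "require-attachment-acceptance": True}
        for name in SEGMENTS
    ],
    # Share only the "shared" segment's routes with all other segments.
    "segment-actions": [
        {
            "action": "share",
            "mode": "attachment-route",
            "segment": "shared",
            "share-with": "*",
        }
    ],
    # Map an attachment to the segment named by its NetworkSegment tag.
    "attachment-policies": [
        {
            "rule-number": 100,
            "condition-logic": "or",
            "conditions": [{"type": "tag-exists", "key": "NetworkSegment"}],
            "action": {
                "association-method": "tag",
                "tag-value-of-key": "NetworkSegment",
            },
        }
    ],
}

print(json.dumps(policy, indent=2))
```

Keeping the policy as data in source control is what lets every segment or sharing change go through review before it reaches the Core Network.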

Managed Prefix Lists

As we drafted the Global Network solution, our AWS partners recommended we leverage Managed Prefix Lists (MPLs) instead of directly referencing CIDRs. Indeed, because the CIDR blocks associated with each IPAM pool may evolve over time, we created MPLs for the Global and Segment pool CIDRs. This way, route tables, security groups, etc. can reference these lists instead of the actual CIDR blocks. This is quite advantageous because as CIDRs within the MPL change, any resource referencing the list will immediately be updated! Considering IPAM pools don’t require CIDR block allocations to be contiguous, MPLs help keep our Terraform codebase DRY and less error-prone.

One drawback to keep in mind is that you need to specify a maximum size, representing the maximum number of CIDRs the MPL can contain. This maximum size is what counts against resource quotas when using MPLs within your resources. This excerpt from the VPC quotas documentation explains this well:

[…] if you create a prefix list with 20 maximum entries and you reference that prefix list in a security group rule, this counts as 20 security group rules.
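A quick way to reason about this is to add up the declared maximum sizes rather than the current entry counts. The sketch below assumes a default quota of 60 rules per security group and uses hypothetical maximum sizes; check the VPC quotas page for the values that apply to your account.

```python
from typing import Dict

# Assumed default quota of rules per security group (per direction).
DEFAULT_RULES_PER_SG = 60


def rules_consumed(cidr_rules: int, prefix_list_max_entries: Dict[str, int]) -> int:
    """Rules counted against the quota: one per plain CIDR rule, plus the
    declared maximum size (not the current entry count) of each
    referenced prefix list."""
    return cidr_rules + sum(prefix_list_max_entries.values())


referenced = {
    "com.samsungads.global.ipam.root": 20,               # hypothetical max size
    "com.samsungads.us-east-1.natgw.workload_name": 10,  # hypothetical max size
}
used = rules_consumed(cidr_rules=5, prefix_list_max_entries=referenced)
print(f"{used} of {DEFAULT_RULES_PER_SG} rules used")
```

This is why it pays to keep the maximum size of each MPL as small as future growth allows.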

As for the naming convention of our MPLs, we opted for prefix list names similar to Amazon’s, built from the following dot-separated components:

- com

- samsungads

- global or the region name

- use case

- (optional) use case layers

Example names we use are com.samsungads.global.ipam.root and com.samsungads.us-east-1.natgw.workload_name. The first example represents the CIDR blocks allocated to the Global Network’s IPAM pool, whereas the second represents the NAT Gateway IP list for a given workload VPC in the us-east-1 region.

MPLs can also be quite useful to reference workload hosts/CIDRs across teams. Such a naming convention makes it easy to discover MPLs by using Terraform data source filters!
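The convention is simple enough to capture in a small helper. The Python sketch below only illustrates how names are composed and how a wildcard filter can discover them; the function names `mpl_name` and `find_mpls` and the us-west-2 entry are hypothetical, and in our codebase the lookup itself happens through Terraform data sources rather than Python.

```python
from fnmatch import fnmatchcase
from typing import List

ORG_PREFIX = "com.samsungads"


def mpl_name(scope: str, use_case: str, *layers: str) -> str:
    """Build a name: com.samsungads.<scope>.<use case>[.<layers>].
    scope is "global" or a region name such as "us-east-1"."""
    return ".".join([ORG_PREFIX, scope, use_case, *layers])


def find_mpls(names: List[str], pattern: str) -> List[str]:
    """Filter names with a case-sensitive, shell-style wildcard pattern."""
    return [n for n in names if fnmatchcase(n, pattern)]


names = [
    mpl_name("global", "ipam", "root"),
    mpl_name("us-east-1", "natgw", "workload_name"),
    mpl_name("us-west-2", "natgw", "workload_name"),  # hypothetical entry
]

# All NAT Gateway lists, regardless of region.
print(find_mpls(names, "com.samsungads.*.natgw.*"))
```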

Routing

With our VPCs having Core Network Attachments in the Available state, routing traffic into the Global Network is now a breeze. We just create a route table entry where the destination is set to an MPL, e.g. com.samsungads.global.ipam.root, and the target is the Core Network ARN. Once this new route is in place for two VPCs connected to the Core Network, you can proceed to remove the old VPC peering connection between them. There you have it, a scalable method to implement cross-VPC connectivity!

There are, however, some situations where VPC peering connections may still be worth maintaining. Consider the following table:

| Data transfer scenario | Using VPC peering connection | Using Cloud WAN |
|---|---|---|
| Intra-VPC + Intra-AZ | Routed locally, free of charge | Routed locally, free of charge |
| Intra-VPC + Cross-AZ | Routed locally, Cross-AZ charges | Routed locally, Cross-AZ charges |
| Cross-VPC in same region and AZ | Free of charge | $0.02/GB |
| Cross-VPC in same region + Cross-AZ traffic | $0.01/GB in both directions | $0.02/GB egress, ingress is free |
| Cross-VPC in different regions | Cross-region charges | $0.02/GB + Cross-region charges |

Have you noticed the $0.02/GB “tax” when using Cloud WAN for cross-VPC traffic? These charges will most definitely add up for high-traffic use cases. Thus, you might want to consider keeping the VPC peering connections for such scenarios, or using in-region shared VPC subnets. AWS route tables prioritize the most specific matching route (longest prefix match), and the CIDR of an individual VPC typically has a longer prefix than the CIDRs associated with IPAM pools. Therefore, even if a VPC has two routes to another VPC, one through Cloud WAN and the other through the VPC peering connection, the more specific peering route typically wins, and you avoid the added Cloud WAN cost for high-traffic flows.
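The route selection described above can be sketched in a few lines. This is a simplified model of longest prefix matching, not the actual VPC routing implementation: the CIDRs and target labels are hypothetical, and the MPL destination is represented by the single CIDR it would contain.

```python
import ipaddress
from typing import List, Optional, Tuple

Route = Tuple[str, str]  # (destination CIDR, target)


def select_route(routes: List[Route], destination_ip: str) -> Optional[Route]:
    """Pick the matching route with the longest prefix (most specific)."""
    ip = ipaddress.ip_address(destination_ip)
    matches = [r for r in routes if ip in ipaddress.ip_network(r[0])]
    if not matches:
        return None
    return max(matches, key=lambda r: ipaddress.ip_network(r[0]).prefixlen)


# Hypothetical route table of a VPC that keeps one peering connection.
routes = [
    ("10.0.0.0/11", "core-network"),  # Global pool CIDR, via Cloud WAN
    ("10.0.64.0/20", "vpc-peering"),  # high-traffic peer VPC
]

print(select_route(routes, "10.0.65.10"))  # ('10.0.64.0/20', 'vpc-peering')
print(select_route(routes, "10.1.2.3"))    # ('10.0.0.0/11', 'core-network')
```

Traffic to the peered VPC takes the /20 route, while everything else in the Global pool still flows through the Core Network.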

Shared VPC subnets

Generally, one of the most expensive items on your cloud invoice is your data transfer costs. You may be tempted to host your workloads in a few large monolithic VPCs, but this hinders network isolation, quota management and various security considerations. Indeed, not only should you not host monolithic VPCs, but you should not own monolithic accounts either. I previously mentioned using a Networking account: one of its primary purposes is to host shared VPCs.

In a nutshell, we create VPCs and their subnets in this account, then use RAM to share the subnets with other principals within our AWS Organization. The account or OU on the receiving end of this RAM share, called the participant, can then deploy resources in the shared subnets. This way, we can co-locate interconnected components of a workload while hosting them in different AWS accounts! We can then reap the benefits of having distinct security controls, limit the blast radius of adverse events and facilitate cost management across these accounts.

VPC owners and participants have different responsibilities and permission sets for resources within the VPC. For example, participants have no oversight whatsoever on NAT Gateways. However, participants can manage their own Security Groups and reference other participants’ or owner SGs within their own.

Putting it all together

This solution is the foundation of our VPC-enabled infrastructure. We now have the capability to innovate and scale out without introducing additional network complexity. This Global Network solution addresses all the pain points we were struggling with:

- We can now create VPCs by requesting a CIDR block from AWS IPAM as part of their creation. We can do this without manual intervention and without the risk of using overlapping CIDRs.

- We require a single Core Network Attachment to provide connectivity (routing) to all other VPCs within the same segment.

- We can easily implement network segmentation by leveraging Cloud WAN’s segmentation feature, while still hosting SIEMs and similar workloads in the Shared segment, which has routes to all other segments.

- Finally, we can utilize shared VPC subnets to reduce data transfer charges for cross-account VPC-enabled workloads within a given region.

Acknowledgements

I would like to thank Steven Alyekhin, Senior Solutions Architect at AWS, for his invaluable assistance in developing this solution.